Install & Run: http://dev.docs.grakn.ai/docs/running-grakn/install-and-run

[2] Grakn 2.0.0-alpha is only accessible and documented through our "staging" documentation website (dev.docs.grakn.ai) right now. The main documentation (docs.grakn.ai) and website (grakn.ai) will continue serving Grakn 1.8.4 until we release Grakn 2.0.0 for production.

[3] Workbase is not yet compatible with Grakn 2.0.0-alpha, and will be coming very soon.

[4] Reasoner will be enabled in the coming week(s).

[5] Performances of all queries are not final yet. For example, sorting and grouping on match queries are still implemented naively.

[6] Pay attention to the nightly releases of Grakn, Graql, Client Java, Client Python and Client Node.js as we'll be frequently submitting patches throughout this Alpha phase.

Grakn 2.0 Alpha: our 2020 (engineering) year-in-review

When we kicked-off 2020, we were so excited to launch Grakn Cosmos: Grakn's first global conference, held here in London in the first week of February. It was an intense start to the year, but we learnt so much about how our community was using Grakn to solve some of the most complex problems in their industries. We met with our community members from Life Sciences, Defence & Security, Financial Services, Robotics, and many other sectors. From financial analytics to drug discovery, cyber threat detection to robotics disaster recovery, Grakn was being used to tackle a higher order of complexity in data and knowledge, and it inspired all of us at Grakn Labs.

We genuinely believe the technologies built by our community can truly change the world one day. However, we also learnt an important lesson from the community that week: Grakn was not fast enough, and we needed to scale the performance.

We were deeply moved by our community's works and how they were impacting their respective domains. We knew that we had to do something big to overcome this massive hurdle that's holding everyone back, and make it the priority of 2020 - no excuses.

And so we took the best of our design, engineering, mathematics, and computer science, from our work over the past five years - just the parts that we know our community loved - and we re-wrote Grakn from scratch [1]. We rebuilt Grakn with an entirely new architecture of best practices in Distributed Systems and Computer Science.

But most importantly, we rebuilt Grakn with a new mindset. One that would set the trajectory of our work for decades to come.

[1] About the only thing we kept from the old Grakn's codebase was our test framework, which was essential to guarantee stability and no regression. So we kept it and extended it by multiple folds.

We find it crucial that we share with the community the decisions that went into Grakn 2.0, hence the length of this post. We'll publish our benchmarking figures very soon, and the points below explain how and why you will see multiple orders of magnitude in performance improvements. The points below also explain why we needed to rewrite the entire Grakn codebase. Grakn 2.0 is effectively a newly written Grakn, with the old test framework migrated and extended to guarantee stability does not regress.

As this is an "ALPHA" release, we would like to remind our community that this is not a production release. We expect to release a production-ready Grakn 2.0 end of this month (end of January 2021) after we go through a series of planned stress-testing and benchmarking regime.

In the meantime, we'd like to ask the community to help us stress test the new Grakn, and submit any bugs to us as "issues" on GitHub (through the most relevant repository) following the issue template to fill in the steps that can help us reproduce them.

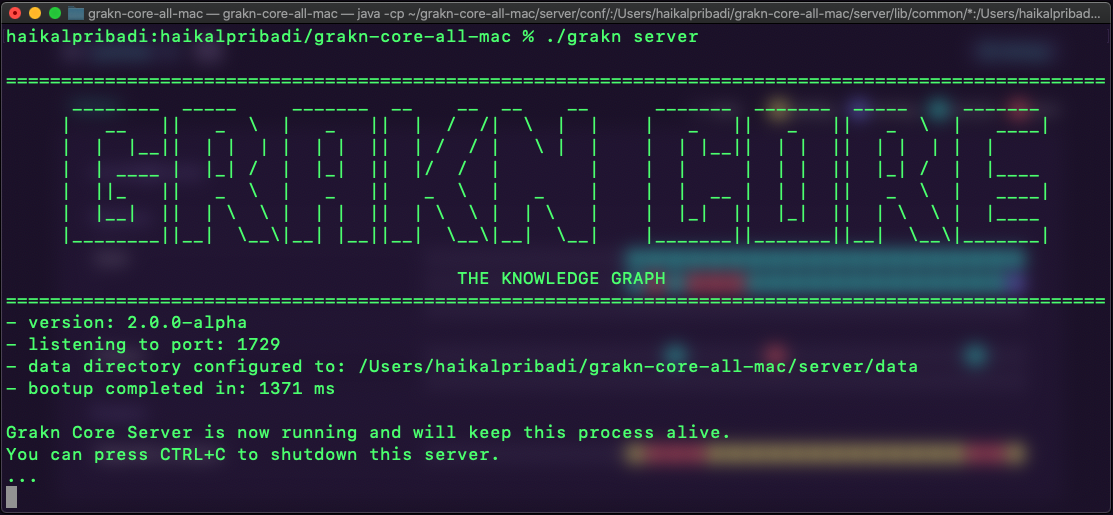

To install and start using Grakn 2.0.0-alpha, visit our "staging" documentation website at dev.docs.grakn.ai, and visit Install & Run. [2][3][4][5][6]

[2] Grakn 2.0.0-alpha is only accessible and documented through our "staging" documentation website (dev.docs.grakn.ai) right now. The main documentation (docs.grakn.ai) and website (grakn.ai) will continue serving Grakn 1.8.4 until we release Grakn 2.0.0 for production.

[3] Workbase is not yet compatible with Grakn 2.0.0-alpha, and will be coming very soon.

[4] Reasoner will be enabled in the coming week(s).

[5] Performances of all queries are not final yet. For example, sorting and grouping on match queries are still implemented naively.

[6] Pay attention to the nightly releases of Grakn, Graql, Client Java, Client Python and Client Node.js as we'll be frequently submitting patches throughout this Alpha phase.

We tried refactoring ...

At the start of 2020, Grakn exclusively depended on JanusGraph as our graph storage engine, and TinkerPop/Gremlin as the (internal) graph query language. JanusGraph itself was built on top of Cassandra as the Key-Value storage engine. There has been great work that went into JanusGraph by their community (which we're hugely thankful for). However, we knew all along that JanusGraph was not the right storage for the type of computation that Grakn's knowledge representation and reasoning system needed to perform.

We always planned that when performance becamse the bottleneck, we’d “open the bonnet”, and refactor and optimise the JanusGraph codebase to fit our usage. So, after Cosmos, we spent some time understanding the JanusGraph source code, but we quickly grasped the complexity of the codebase. However, most of the code in JanusGraph, which includes TinkerPop/Gremlin implementation, was written for purposes that don’t serve any of our needs in Grakn. And the effort to untangle it did not seem feasible.

That was the moment when we realised the right thing to do was to build our own graph storage engine - one that was custom-built for our knowledge representation system, tailored to our hypergraph data structures, and will forever be fully in our control.

1) Replaced Cassandra with RocksDB

If we're going to replace JanusGraph graph, we might as well ask: should we keep Cassandra as the key-value storage? What's the fastest key-value storage we should use to build Grakn? This research quickly leads us to RocksDB, a powerful open-source, low-level key-value storage engine, built by the engineering team at Facebook. RocksDB is an embedded storage engine, commonly used to build higher-level databases. It's written entirely in C++ for max performance, where "keys" and "values" are simply arbitrarily-sized byte arrays, easily suitable for storing multiple terabytes of data in a single server. It's optimised for fast, low latency storage, and it exploits the full potential of high read/write rates offered by flash or RAM - exactly what we want. Beyond Facebook, RocksDB has been widely adopted among established companies. RocksDB has been proven to be a scalable, embedded storage engine for numerous other database technologies.

2) New Graph Storage Engine: replacing JanusGraph

Using RocksDB as our key-value storage, we built a new graph storage engine, custom-built for Grakn's knowledge representation system, tailored to our hypergraph data structure. The graph is still a "directed binary graph", but it is made of 2 co-existing graphs: the "schema graph" and the "data graph". The schema graph serves as a constraint to the data graph. Both graphs manage data that sits in RAM, storage, and any movement in between, transparently. You can consider these graphs as a hybrid of in-memory and persisted graph. The schema graph is unique in that it always lives in memory for as long as it can. Both graphs are thread-safe and designed for concurrent usage by frameworks that sit above it.

3) New Grakn Type System: our Knowledge Representation

Now that we have our own graph storage engine, tailored to our hypergraph data structures, we had the opportunity to rewrite our knowledge representation system. This is the system that governs the types you define in your schema, verifies the correctness of your data, encodes the logical representation of persisted data, and serves as the foundation to the reasoning system. You can see this as Grakn's "type system", and we were able to rebuild it to be much more expressive, simpler and performant.

We extended the previous type system with more expressive type inheritance: role type inheritance and overriding attribute types and role types. We simplified the system by making role types scoped to their relation types: naming roles is now more straightforward and more user friendly. We introduced type-safety restrictions. We introduced a new system for exception handling, which provides much more helpful error messages to guide you in designing your schema. Last but not least, we rebuilt the type system to be thread-safe and always live in memory.

4) New Graql: even simpler and more powerful

As a result of the change in our type system, Graql also needed to be changed. We kept the grammar almost entirely, especially in data queries, but we considerably improved the schema language. We introduced more expressive features, such as inheriting and overriding attribute types and role types, to reflect the type system's new features. However, we also introduced changes that significantly simplify your schema, such as "scoping role types to their relation types", making the Graql language even easier to use. Under-the-hood, we rebuilt Graql to natively have a graph data structure and be semantically logical - which made implementing everything else in Grakn so much easier. You should review full changelog in the Graql 2.0.0-alpha release notes.

5) New Traversal Engine: replacing TinkerPop/Gremlin

TinkerPop/Gremlin is a graph computing framework that allows graph storage engines, such as JanusGraph, to be queried using TinkerPop's graph query language: Gremlin. For the first five years, Grakn has relied on TinkerPop/Gremlin to query the binary graph in which our knowledge representation system stored its data. Graql would be translated through our reasoning engine and eventually hit JanusGraph with a Gremlin query. We'll always be hugely thankful to TinkerPop for enabling us to prove some fundamental questions in building a knowledge graph and reasoning engine.

However, now that we have our very own graph storage engine, we had the incredible opportunity to write our own traversal engine, that heavily optimises the traversal algorithms to our native hypergraph data structures. This is natively parallelised to exploit the graph storage being thread-safe.

6) New Query Planner: an Integer Linear Program

Having our own storage engine also meant that we had the freedom to create and store various kinds of statistics/metadata about the data stored in the graph, categorised by the types in the schema that describes those data. All without affecting the performance of the storage engine. This opened a new opportunity for us: we can now perform a thrifty/non-greedy optimisation that considers the possible query plans more globally, through a "Mathematical Optimisation/Programming" algorithm commonly known as "Integer Linear Programming".

Given a query that a user writes, there are hundreds or thousands of possible execution paths to get the answers. Most of them would be too costly for the traversal engine to execute as they produce millions of permutations in the execution. Few of those paths would be efficient paths to traverse, and the difference could be multiple orders of magnitude in traversal performance. This is an inherent problem with databases, especially graph databases, as they expose the ability to query multiple degrees of relationships. If you leave it to the developer to determine the most efficient traversal execution path, this would be a very challenging task.

Integer Linear Programming algorithms allow us to create constraints that determine the bounds of a valid solution, and a goal function to optimise the solution with. The metadata about the graph that we now can store, enables us to generate goal functions that are aware of the cost of different parts of the graph upon which a query would traverse. This allows Grakn to transparently determine the most "globally optimal" path to execute the query, while the developer is left to determine the constraints that the query needs to satisfy.

7) New Reasoning Engine: based on Event Loop + Actor Model

[4] We are in the midst of completing the new reasoning engine and it's not yet enabled. It will be enabled in the coming week(s) through one of the 2.0.0-alpha nightly releases, and will certainly be completed for 2.0.0 production release.

With a thread-safe graph storage and traversal engine designed for concurrent usage, again, we had the opportunity to redesign how Grakn's reasoning engine works. Inspired by JavaScript's Event Loop and Akka's Actor Model we built our own Event Loop and Actor Model libraries. The event loop is a concurrent framework that collects events and executes their code in parallel. It is different from most concurrent frameworks in that it is non-blocking, zero-context-switching and asynchronous by nature, which allows it to have much higher throughput. The actor model is also a concurrent framework - one that allows concurrent computation be written through the model of an "actor": a computational entity that can receive/send messages from/to other actors that result in the actor executing/designating a computational task.

So we rewrote our reasoning engine to be natively modelled as concurrent actors, powered by our event loop. By representing reasoning operations as actors, we not only unlock massive scalability of computation, we are also able to reuse the results of computations, such as inferences and inference explanations. Streamlining the reasoning data structures also simplified the computational model, which resulted in fewer logical edge cases, more cachable states, and allowed us to add new features far more quickly. We also introduced a "type resolver", which reasons over a Graql query, to validate user-written rules and prune the ones that are not relevant to the user's query.

8) New Query Engine: an Asynchronous Producer-Consumer

Previously, a Graql query gets executed mostly single-threaded. Now, the graph traversal and reasoning engine are both parallelised. How can we leverage them (in tandem) in our query engine?

The answer was to introduce a higher level concurrent framework: an asynchronous Producer-Consumer framework. A producer-consumer pattern is a common pattern in distributed systems, where one or more concurrent jobs produces data into a queue, and one or more concurrent jobs read from the queue. In the case of Grakn: we saw the opportunity to use this pattern to allow concurrent computation of a query to produce "answers" into a queue, and the server consumes those answers to return to the client. We also ensured that the concurrent jobs be non-blocking and run asynchronously to achieve maximum throughput.

Then we modelled the two computations, the graph traversal and reasoner (which itself leverages graph traversal), to be "producer" computations. Each computation can run concurrent jobs to produce answers to a queue. Both are inherently parallelised as explained above, and now both can run together in one producer-consumer framework: the query engine. Now every query will try to leverage the CPU resource as much as possible. [4]

[5] Performances of all queries are not final yet. For example, sorting and grouping on match queries are still implemented naively.

9) New Client-Server Protocol: a Reactive Stream

With the server performance scaled, we need to ensure the client-server communication was not a bottleneck. We want the client application to leverage the server's asynchronous parallel computation to receive as many answers as possible, as soon as they are ready. However, we don't want the client application to be overwhelmed with server responses. So, we needed some form of "back-pressure". However, to maintain maximum throughput, everything had to be non-blocking. Sounds familiar? Well, it's the "reactive stream" problem.

We took inspiration from Java Flow and Akka Stream, and built our own reactive stream over GRPC, as lightweight as possible, with our unique optimisations. When an application sends a query from the client to the server, a (configurable) batch of asynchronously computed answers will immediately be streamed from the server to the client. This reduces network roundtrips and increases throughput. Once the first batch is consumed, the client will request another batch. We remove waiting time between the first and second batch, by predicting that duration and streaming back surplus answers for a period of that duration, at the end of every batch. This allows us to maintain a continuous stream of answers at maximum throughput, without overflowing the application.

We then hit the max limit of responses GRPC can send per second. So the last trick was to bundle multiple query answers into a single server RPC "response". The impact on query response time was negligible, but it dramatically increased answer throughput again.

The new client architecture and Protobuf definitions are also hugely simplified to ease the developers' effort to build their own client libraries.

10) New Grakn Cluster: a Raft-based distributed Grakn

Grakn Cluster (previously called Grakn KGMS) is an extended version of Grakn that runs multiple database servers as one cluster, to provide scalability and high-availability for production deployments. Without Cassandra, we now need an alternative strategy to scale Grakn as a cluster. Naturally, we considered leveraging TiKV: a distributed key-value storage, also built using RocksDB. However, we needed something lower-level and more performant to scale our graph storage engine. So we built our own distributed storage using RocksDB.

We considered the two established distribution protocols: Paxos and Raft. Both have the same theoretical performance profile, but Raft is significantly simpler and more elegant. Using the same Event Loop and Actor Model libraries from Grakn Core (which actually originated from Grakn Cluster), we modelled the core Raft algorithm itself as a finite-state machine. The Raft algorithm is asynchronous by nature, but we ensured our implementation had no locks and mutexes, and we built Grakn Cluster as a fully event-driven and non-blocking system. Data replication was implemented through message-passing using ZeroMQ, which aligned with our asynchronous architecture.

The ultimate challenge was: how do you test an asynchronous distributed system, that is non-deterministic by nature? Inspired by Apple's FoundationDB team on how they simulated their distributed cluster, we built our own Raft "simulation" framework, which allows us to run deterministic and repeatable simulations of an entire distributed cluster. If a bug is observed in a Raft simulation of cluster of size n and time t, then we can repeat the simulation with the same seed and configuration, to reproduce the bug.

11) New Grakn Console: powered by PicoCLI + JLine

We've also rebuilt the Grakn Console. We use PicoCLI to enable more expressive CLI utilities, allowing us to build a richer and user-friendly console environment for Grakn. We use JLine to build an interactive console environment, which provides multiline editing, console command history, and many more features that help with the development in console. This change sets up the ecosystem for us to continue extending our Grakn Console with new features in a cohesive and backwards-compatible way.

The new Grakn Console provides two levels of interaction: database-level and transaction-level interfaces. The database-level interface is the first level of interaction, i.e. first-level REPL. From one of the database-level commands, you can open a transaction to the database. This will open a transaction-level interface, i.e. second-level REPL. You should review full changelog in the Grakn Console 2.0.0-alpha release notes.

12) New Benchmarking System: an Agent-based Simulation

There are two goals of our benchmarking system: to produce a meaningful complex network of data that are interrelated with each other so we can perform meaningful complex queries against it, and to produce comparable benchmarks between two different database technologies. To achieve these goals, we built an agent-based system that simulates the creation of a virtual world.

An "agent-based" system is a simulation that uses a "micro-scale model" to simulate concurrent operations and interactions of multiple low-level "agents" in an attempt to recreate and imitate a high-level, complex phenomenon. In our case, the complex phenomenon we're simulating is the world: people are born in cities, cities are located in countries, people getting married, people going to school, people going to work at companies, companies created in countries, and so on. Each agent will take on a single one of these tasks, not knowing any other agents and the broader scope of the simulation system. As each agent runs in parallel over multiple iterations, complex networks of entities and relations emerge in the graph produced by the simulation.

The simulation allows us to control the density of interrelationships, as well as the number of iterations it will run to. This gives us control over the scale we want to benchmark. The agent-based model also allows us to curate a diverse set of database operations, used to implement different agents, ensuring we test as many features of the database being benchmarked as we can. But most importantly, the agents form a higher-level abstraction of responsibility that can be implemented with different database operations, when benchmarking different database technologies. Each of the database operations may not be comparable, like-for-like, across two different database technologies. However, the performance of one type of agent implemented across two different technologies remains directly comparable.

13) New Grabl CI/CD: replacing CircleCI

Since the end of 2018, we were already vigilant in ensuring everything that can be tested or automated in CI/CD, would be tested or automated in CI/CD. By the end of 2019, we ended up with an end-to-end CI/CD pipeline on CircleCI, covering everything from unit tests, integration tests, assembly tests and deployment tests, to release verification and deployment. However, this came with a lot of infrastructure complexity. We had servers in Heroku to coordinate the CD workflow on CircleCI - it was kind of a hack that CircleCI was not meant to do. We had static-code analysis done on SonarCloud, and lots of scripts in Heroku to automate triaging issues on GitHub. We had a server to automate the upgrading of repository dependencies, and a server to run benchmarking jobs in the cloud. We had to use Zipkin to do performance tracing, and used ElasticSearch to store and visualise the benchmarking data. We were manually running benchmarking jobs in Google Cloud, and automating the benchmarking of multiple servers as a cluster was not an option. Managing credentials across all these platforms was also a nightmare.

However, all these tasks are common to engineering teams building any substantially complex system. Why are they not automated by one system? And that's how Grabl was born.

First and foremost, Grabl is built to automate the workflow of the Grakn Labs engineering team (hence the name: "Grakn's Builder"). This is the sole focus of Grabl for now, even though we see Grabl being applicable to any software engineering team in the future. We wanted a system that was beyond CI/CD; we wanted a system that covers the entire spectrum of the software development lifecycle, which includes teams building distributed systems and work across multiple repositories.

So for starters, we built Grabl to automate two types of pipelines: [6]

- Build pipeline: to automate workflows for every "build" of the source code. This pipeline will run for every commit on a repository.

- Release pipeline: to automate workflows for every "release" of the repository. This pipeline can be triggered at the end of a build pipeline of every commit, if the "verification" workflow passes.

[7] Soon there will be a third pipeline: a "sync dependencies" pipeline, that will automate the job of upgrading every repository whenever there's a (valid and) new version of a dependency. This will propagate automated upgrades from one repository to another across the organisation.

The build pipeline is comprised of three workflows:

- Quality workflow: automate jobs that produce source code analysis data, and does not affect the correctness of the build. For example, we automate the SonarCloud source code analysis job in this workflow, as well as our "dependency analysis" job to evaluate whether our dependencies are up-to-date.

- Correctness workflow: automate typical CI jobs that determine the correctness of the source code, a.k.a. tests: unit tests, integration tests, assembly tests, deployment tests, and so on. Only jobs in this workflow will update the commit status on GitHub.

- Performance workflow: automate jobs that run benchmarks. These jobs tend to be long-running, depend on each other, interact with one another, and produce benchmarking data.

With a single commit that you push to GitHub, we analyse the quality of our codebase, run all the tests that determine the correctness, produce benchmarking data to analyse performance. There's zero developer intervention in between.

The release pipeline is comprised of three workflows:

- Validation workflow: automate jobs to verify whether a commit can be a valid release candidate. This workflow runs on every commit. If this workflow and the build pipeline passes, the release button will be enabled for admins to trigger.

- Deployment workflow: automate the jobs of deploying the software to the distribution platforms.

- Broadcast workflow: automate the jobs of updating systems in your ecosystem of the new release you have just completed.

Every single commit you push to GitHub can be a valid release candidate, if the validation workflow says so. With a single click of approval, the release deployment and broadcasting runs.

A job is a unit of work that the user defines by providing the "command" script, and parameters to configure the virtual machine it will run on. There can be "foreground" or "background" jobs. The jobs can depend on each other, be aware of each other's IP address, and can interact with one another. You can also configure the images and resources of the virtual machine that runs the job.

We designed the UX of Grabl such that jobs, workflows, and pipelines are easily monitored across all repositories in an organisation, and all pages are updated in real-time as the automation runs in the cloud. This level of organisation-wide visibility was key to the vision of how we want Grabl to serve our team.

We also built a performance tracing system into Grabl, and the UI to visualise the benchmarking data. We can analyse the "traces" of performance benchmarking tasks, nested hierarchy among themselves, macro-level statistical patterns of the benchmarks, micro-level data of each operation, comparisons between 2 commits, and even comparisons between benchmarks of two different database technologies. You'll get to see more of Grabl Benchmarking features as we publish Grakn 2.0.0 benchmarking reports in the near future.

It's still early days for Grabl, but we now have a platform that can encompass the entire spectrum of the software development lifecycle, and we can extend it to automate anything that we want at Grakn Labs.

Beyond all the features we've mentioned, there's one more key point that makes Grabl vital: Grabl is built entirely using Grakn. It is our very own power-user of Grakn that we developed ourselves, and it serves a key purpose in our team: Grabl puts us in the shoes of our users developing with Grakn, and creates a strong feedback loop to Grakn's core development.

That just about summarises 2020 for our engineering team at Grakn Labs. We are truly proud of the tremendous work that the team has accomplished, and we're hugely thankful for the support of everyone in our community. We hope everyone is excited for what Grakn 2.0 will deliver to all of us this year.

Stay tuned for Grakn 2.0.0 production release very soon, and then our benchmark reports soon after.

Onwards and Upwards!